[Java Big Data Development] Integrating Hadoop with Spring Boot


1. Create a new Spring Boot project and add the Hadoop dependencies.

<!-- Hadoop dependencies -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.7.4</version>

<exclusions>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

<exclusion>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

</exclusion>

<exclusion>

<groupId>javax.servlet</groupId>

<artifactId>servlet-api</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-common -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.4</version>

<exclusions>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

<exclusion>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

</exclusion>

<exclusion>

<groupId>javax.servlet</groupId>

<artifactId>servlet-api</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.7.4</version>

<exclusions>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

<exclusion>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

</exclusion>

<exclusion>

<groupId>javax.servlet</groupId>

<artifactId>servlet-api</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- https://mvnrepository.com/artifact/log4j/log4j -->

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.16</version>

<scope>compile</scope>

</dependency>

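2. Add the Hadoop settings to the application.properties configuration file

# Run `hdfs getconf -confKey fs.default.name` on the virtual machine to get the correct address and port (fs.default.name is the deprecated name of fs.defaultFS); replace the host name with the IP address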

hadoop.name-node: hdfs://192.168.101.111:8020

# The HDFS directory to create

hadoop.namespace: /test1
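A malformed `hadoop.name-node` value is an easy mistake to make here, so the following is a minimal sketch of a sanity check, not part of the original demo. It uses only the JDK: `java.util.Properties` accepts the `key: value` form used above, and `java.net.URI` splits the value into scheme, host, and port. The class name `NameNodeConfig` and the method `nameNodeUri` are hypothetical names introduced for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.net.URI;
import java.util.Properties;

// Hypothetical helper (not in the original demo): validates the
// hadoop.name-node setting before it is handed to FileSystem.get(...).
class NameNodeConfig {

    // Parses properties text and returns hadoop.name-node as a URI.
    // Throws IllegalArgumentException if the value is missing or is not
    // of the form hdfs://host:port.
    static URI nameNodeUri(String propertiesText) {
        Properties props = new Properties();
        try {
            // Properties accepts both "key=value" and "key: value".
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        String value = props.getProperty("hadoop.name-node");
        if (value == null) {
            throw new IllegalArgumentException("hadoop.name-node is not set");
        }
        URI uri = URI.create(value.trim());
        if (!"hdfs".equals(uri.getScheme()) || uri.getHost() == null || uri.getPort() == -1) {
            throw new IllegalArgumentException("expected hdfs://host:port, got " + value);
        }
        return uri;
    }

    public static void main(String[] args) {
        String text = "hadoop.name-node: hdfs://192.168.101.111:8020\n"
                    + "hadoop.namespace: /test1\n";
        URI uri = nameNodeUri(text);
        System.out.println(uri.getHost() + " " + uri.getPort());
    }
}
```

A check like this could run once at startup so that a wrong address fails with a readable message rather than a connection timeout.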

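3. Download the Hadoop package and extract it to a local directory

Download a prepared Hadoop package (one that includes bin\winutils.exe) and extract it. Without it, running the code on Windows fails with: java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.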
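The `null` in that message appears when neither the `hadoop.home.dir` system property nor the `HADOOP_HOME` environment variable is set, so Hadoop has no home directory to prefix to `bin\winutils.exe`. As a rough sketch under that assumption, the check below verifies the extracted package before any HDFS call is made. `WinutilsCheck` and its methods are hypothetical names, and `Path` here is `java.nio.file.Path`, not Hadoop's `org.apache.hadoop.fs.Path`.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical pre-flight check (not in the original demo).
class WinutilsCheck {

    // Location where winutils.exe is expected under the given Hadoop home.
    static Path winutilsPath(String hadoopHome) {
        return Paths.get(hadoopHome, "bin", "winutils.exe");
    }

    // True only if a home directory is given and it contains bin/winutils.exe.
    static boolean hasWinutils(String hadoopHome) {
        return hadoopHome != null && Files.isRegularFile(winutilsPath(hadoopHome));
    }

    public static void main(String[] args) {
        // Same lookup order as described above: system property first,
        // then the HADOOP_HOME environment variable.
        String home = System.getProperty("hadoop.home.dir", System.getenv("HADOOP_HOME"));
        if (hasWinutils(home)) {
            System.out.println("winutils.exe found at " + winutilsPath(home));
        } else {
            System.out.println("winutils.exe not found; hadoop home = " + home);
        }
    }
}
```

Running it with `-Dhadoop.home.dir=<extracted directory>` shows whether the directory passed to `System.setProperty("hadoop.home.dir", ...)` in the tests is the right one.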

4. In a test class, create the HDFS directory, then upload and download a file

@Value("${hadoop.name-node}")

private String nameNode;

@Value("${hadoop.namespace}")

private String filePath;

@Test

public void init() throws URISyntaxException, IOException {

// Point to the directory where the Hadoop package was extracted

System.setProperty("hadoop.home.dir", "H:\\hadoop-2.7.2");

// 1. Get the file system

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI(nameNode), configuration);

// 2. Create the HDFS directory if it does not exist yet

Path path = new Path(filePath);

if (!fs.exists(path)) {

fs.mkdirs(path);

}

// Release resources

fs.close();

System.out.println("Done!");

}

@Test

public void upload() throws URISyntaxException, IOException {

System.setProperty("hadoop.home.dir", "H:\\hadoop-2.7.2");

// 1. Get the file system

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI(nameNode), configuration);

// 2. Upload the file (delSrc = false keeps the local copy, overwrite = true replaces an existing target)

fs.copyFromLocalFile(false,true, new Path("C:\\Users\\New\\Desktop\\test.txt"), new Path(filePath));

// Release resources

fs.close();

System.out.println("Done!");

}

@Test

public void download() throws URISyntaxException, IOException {

System.setProperty("hadoop.home.dir", "H:\\hadoop-2.7.2");

// 1. Get the file system

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI(nameNode), configuration);

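// 2. Download the file (delSrc = false keeps the HDFS copy, useRawLocalFileSystem = true writes no local .crc file)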

fs.copyToLocalFile(false, new Path(filePath+"/test.txt"), new Path("D:\\"), true);

// Release resources

fs.close();

System.out.println("Done!");

}

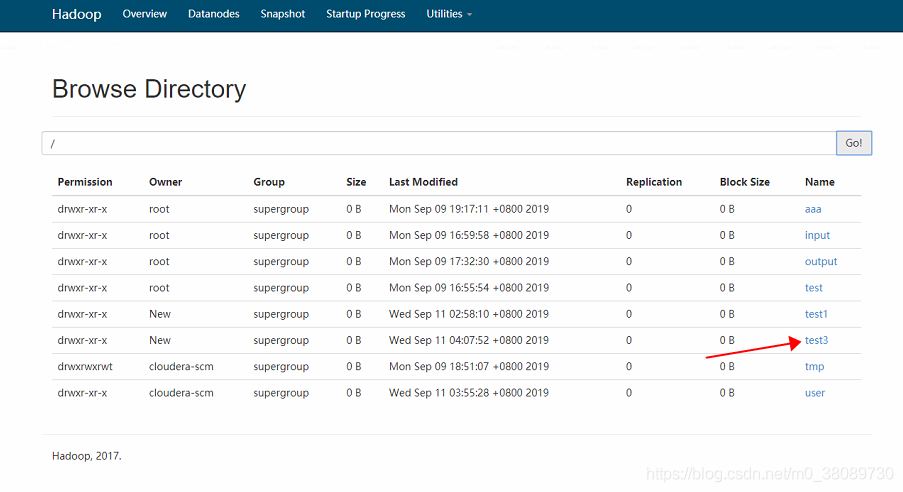

5. 查看结果

Demo on GitHub: https://github.com/OnTheWayZP/hadoop

Author: zapoul

Source: https://blog.csdn.net/m0_38089730/article/details/100728639
